In 1839, when the first photographs were shown in Paris, many people didn’t believe them. Daguerreotypes, as they were called, were silvered copper plates bearing images that seemed too sharp, too real, and too sudden to trust. Some thought they were optical tricks. Others claimed they were frauds. Painters, whose skill had always been judged by brushstrokes and preparatory sketches, scoffed at the idea that a device could make something so lifelike in an instant.

The unease wasn’t really about what the photographs showed. It was about how they were made. A painting revealed its effort: layers of pigment, hours and days of brushwork, the faint outline of mistakes beneath the final coat. A photograph revealed none of that. It appeared in an instant, with no scars of the process. Some admired it. Many doubted it.

Nearly two centuries later, the same unease has returned, only sharper. This time, it isn’t about photographs. It’s about AI, trust, and authenticity.

Earlier this year (2025), news broke that a PhD student in health economics at the University of Minnesota had been expelled after being accused of using AI during a crucial exam. He had taken the test remotely while traveling in Morocco. It was an eight-hour ordeal, graded by four professors, and the rules were clear: books and notes were allowed, but AI was not.

When the results came back, the professors claimed the answers didn’t sound like the student. They pointed to phrasing, to structure, even to an acronym, “PCO”, that struck them as suspicious. On that basis, the university expelled him.

The expulsion cost the student his degree, his visa, and his academic future. His advisor called it “a death penalty.” He is now suing the university, alleging defamation and discrimination, but the verdict of suspicion has already marked him, perhaps permanently.

And he won’t be the last. Writers, designers, musicians, even ordinary people talking online, now live with the risk that their work, or their voice, will be dismissed on suspicion alone. Nothing needs to be proven. Doubt is no longer rare. It’s automatic.

Synthetic Suspicion: The New Reflex Against AI-Generated Work

Synthetic Suspicion is the reflexive doubt that something you see, read, or hear was generated by AI, even if it wasn’t.

It has two triggers:

- Polish-triggered suspicion. Work that feels too perfect, too smooth, too flawless. An essay with every edge sanded down. An illustration with no brushstrokes. A demo track too glossy to have come from a garage band.

- Pattern-triggered suspicion. Work that carries the fingerprints of a machine, even when imperfect. The clipped rhythm. The symmetrical phrasing. The parade of em dashes. A line like “It didn’t whisper. It roared.” might just be a writer reaching for drama, but our brains, trained on GPT cadences, flag it anyway.

Both triggers lead to the same place: the reflexive doubt that what looks human may not be.

And the doubt won’t stop with text, images, or music. It keeps widening. Today we wonder if an essay is AI. Tomorrow we’ll wonder if the person on the other end of a chat — or the voice on the other end of a call — is real at all. The em dash in that sentence may already have set off your alarms.

Suspicion spreads faster than trust.

It doesn’t matter whether the suspicion is true. Once it’s there, it colors everything: the work, the creator, even the trust between two people.

Why Our Brains Suspect AI: Pattern Recognition and Survival Instincts

Humans are pattern-recognition machines. It’s what has kept us alive. We don’t just observe. We predict and infer. A rustle in the grass could be the wind. Or it could be a snake. The instinct to assume “snake” is baked into us, and for good reason.

For most of human history, a false positive was cheap. You jumped, your heart raced, you looked silly, and you moved on. A false negative could be fatal. Miss the snake once, and you might not get another chance. So our brains evolved to over-detect, to assume danger even when the evidence was thin. “Better safe than sorry” isn’t just a proverb. It’s a framework for human survival.

That instinct hasn’t changed. But the environment has. Today, the snake in the grass looks different. Over the past two years, millions of people have spent hours reading, skimming, and scrolling through AI-generated content. Quietly, our brains have been training themselves to spot its fingerprints: the clipped rhythm, the symmetrical phrasing, the em dash parade. None of these is proof. But once you’ve seen them often enough, the flag goes up anyway.

It’s the same mechanism that lets you recognize a Taylor Swift song before the chorus. Familiarity sharpens suspicion. Repetition makes the pattern so easy to spot that we see it even when it isn’t there.

That’s what makes Synthetic Suspicion so slippery. Sometimes it’s accurate. Often it’s a false positive. But once the marker is visible, it can’t be unseen. And once it’s there, it changes how entire fields of work are judged.

Which is why history keeps repeating itself.

Echoes from History: Photography, Phonographs, and Today’s AI Doubts

In 1906, the American composer John Philip Sousa testified before Congress that the phonograph would ruin music. He called recorded songs “canned music,” and warned that if people listened to machines instead of live musicians, human creativity would wither. Families would stop gathering to sing together, he said. Children would grow up unable to produce music on their own.

Sousa wasn’t entirely wrong. Record players changed music. The industry bent around them. But the suspicion wasn’t really about the sound. It was about the process. Live music showed its effort: the tuning of instruments, the breath of singers, the mistakes that proved it was real. A record revealed none of that.

That same reflex surfaced every time a new media innovation appeared.

When photography arrived in the 1800s, early viewers called the images trickery, and painters insisted they weren’t real art. In the early 1900s, critics argued that phonographs undermined authentic performance by stripping away the proof of effort that a live stage displayed. In the 1990s, “That must be Photoshopped” became the default suspicion whenever an image looked too perfect. In the 2000s, teachers dismissed student essays as “probably copy-paste” from Wikipedia.

The inventions change. The reflex doesn’t. Every era invents a new way to doubt what it has just created.

Why AI Suspicion Matters Today: Trust, Authenticity, and Proof of Effort

What makes AI different is scale. Photography questioned pictures. Phonographs questioned music. Photoshop questioned images. Wikipedia questioned facts. AI questions everything at once. Writing, images, voices, even people. Suspicion that once stayed contained now spills everywhere.

Content authenticity and provenance now sit at the heart of credibility. Fakery is fast. Proof is slow. And that changes the balance of trust.

Suspicion bends the market for trust. The safer, plainer, more mediocre work often feels more believable than the spectacular, because the spectacular now risks looking synthetic.

The odd thing about Synthetic Suspicion is that it punishes the honest more than the dishonest. An AI model never has to worry about being accused of looking human. That’s the expectation. A human can lose everything over a single doubt. Synthetic Suspicion doesn’t punish fakery. It punishes effort.

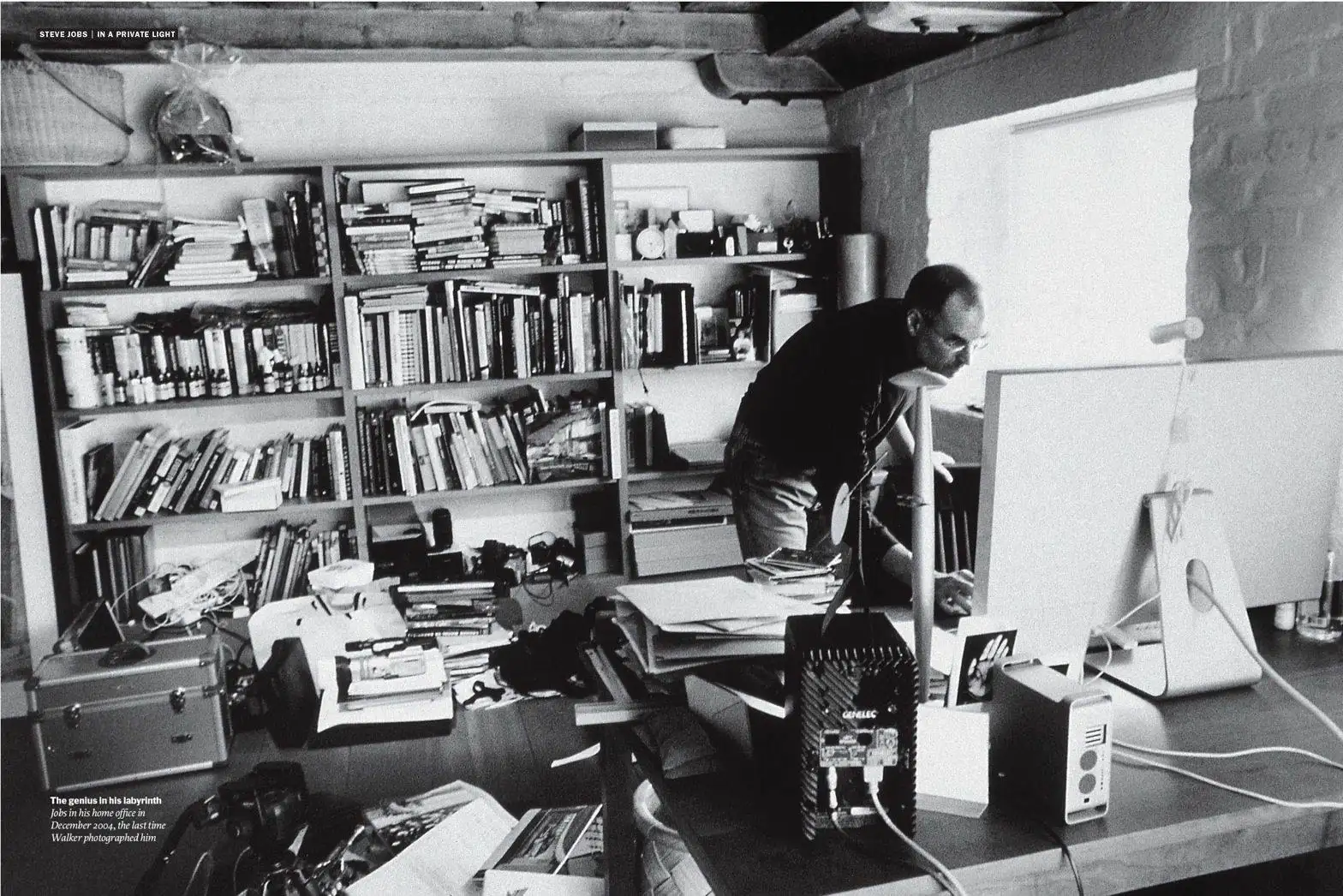

For most of history, the challenge was making something worth attention, work so striking that people couldn’t help but share it. Today, the challenge is different. It isn’t creating what’s remarkable. It’s proving that you made it, not an algorithm. Yesterday’s prize is today’s warning sign.

Perfection used to be the goal. Now it’s the red flag. A flawless finish isn’t reassurance anymore. It invites doubt and disbelief.

So the scars of work become the signal. Drafts. Versions. False starts. Commit logs. The messy trail of human work may outshine the polished result. In a world of endless outputs, it’s process, not polish, that earns belief.

Just as food learned to sell itself with the word “organic,” creative work may learn to sell itself with the word “human.” Labels, audits, and provenance may become part of how we consume not just food, but stories, songs, and even conversations.

Artificiality Bias: When AI Doubt Becomes Human Bias

Suspicion is a reflex. Bias is what it leaves behind.

Synthetic Suspicion is the gut-level doubt. Artificiality Bias is the tilt in judgment that follows when doubt doesn’t just appear but shapes how we treat the work and the person behind it.

The cost falls hardest on the honest. A student’s voice can be mistaken for ChatGPT. A designer’s crisp edge for Midjourney. A musician’s clean mix for software.

Bias bends the balance. It makes the mediocre safer than the spectacular, because the spectacular now looks suspicious. Mistakes feel safer than polish.

The psychology isn’t new. People fear being duped more than they enjoy being amazed. A single false suspicion can outweigh a hundred honest efforts. Trust erodes faster than it builds.

Artificiality Bias doesn’t just misjudge machines. It misjudges humans. It rewrites incentives: polish is punished, process is prized, and authenticity is no longer assumed.

The Professions of Trust in the AI Era

And this shift won’t just reshape how we judge work. It will reshape work itself. Entire professions may rise around proving authenticity.

- Auditors of the Human. Just as accountants certify books and regulators audit emissions, we may need professionals who verify that a person genuinely created an essay, illustration, or song. Authenticity audits could become as routine as financial audits.

- Proof-of-Process Platforms. Developers already use GitHub to show a trail of code. Tomorrow, writers, designers, and musicians may need equivalent platforms that log drafts, edits, and versions as part of the final product. A résumé might include not just what you’ve accomplished, but visible evidence of how you accomplished it.

- Trust Labels. Just as food rebranded itself with “organic” and “fair trade,” creative work could be sold with “human-made” as a mark of value. Labels and watermarks may travel with content the way nutrition labels travel with food. Consumers won’t just want a song or an essay. They’ll want proof that it wasn’t born in silicon.

- Process as Luxury. The very things we once tried to hide (mistakes, drafts, the labor behind the work) could become a status symbol. In a world where flawless outputs are free, it’s the messy trail of human effort that becomes scarce. People may pay more to see the brushstrokes than the polished canvas.

- New Careers. From “provenance engineers” who build cryptographic proof trails, to curators who specialize in certifying human authorship, the job market itself will tilt. A future novelist may need not just an editor and a publicist, but an authenticator.

- Content Credentials & Watermarking. Expect wider use of content credentials, watermarking, and provenance logs so authenticity can be verified without guesswork.

- AI Directors. Just as cinema needed directors to guide actors, AI will need directors to guide models. Their job won’t be typing one prompt, but shaping hundreds of micro-decisions: what to keep, what to discard, how to blend human input with machine rhythm. Judgment becomes a skill.

- Conductors of Systems. As text, image, audio, and video models merge, another role emerges: the conductor. Someone who can orchestrate different AI models like instruments in a symphony, making sure they work in harmony instead of dissonance.

- Taste as Skill. When AI makes competence abundant, the scarce thing is taste: knowing what not to generate, when to stop polishing, and when to leave in a human imperfection. Taste becomes the differentiator. Restraint becomes a form of authorship.

Public transparency reports and visible audit trails will matter as much as the final file.

The irony is striking. AI was built to free us from effort. But its byproduct may be a new economy devoted entirely to proving effort, and a new art devoted to directing it.

Synthetic Suspicion is the reflex. Artificiality Bias is the behavior it creates. Together, they explain the new doubt that shadows everything we see, read, and hear.

Authenticity was once assumed. From here on, it will have to be demonstrated. For centuries, doubt was the exception. In the age of AI, it becomes the default.

In this new world, doubt has two triggers: perfection and pattern. Together, they form the reflex of our time: Synthetic Suspicion.

Frequently Asked Questions About Synthetic Suspicion and AI Trust

What is Synthetic Suspicion?

Synthetic Suspicion is the gut-level doubt that something was AI-generated, even when it wasn’t. It’s often triggered by work that feels too polished or that carries machine-like rhythms and patterns we’ve subconsciously learned to spot.

What is Artificiality Bias?

Artificiality Bias is what follows suspicion: the tendency to judge polished, spectacular work as less trustworthy than messy or mediocre work. In the AI era, this bias punishes effort, and people feel safer trusting imperfections.

Why do humans suspect AI content so quickly?

Our brains evolved to over-detect patterns as a survival instinct. After repeated exposure to AI-generated text, images, and music, we now flag machine fingerprints (clipped cadence, symmetry, predictable phrasing), sometimes even when they aren’t really there.

How can creators prove their work is human-made?

By showing their process. Drafts, edits, commit logs, or even cryptographic proof can serve as evidence of human effort. In the near future, we may see “human-made” labels on creative work, just like food carries “organic” or “fair trade.”

Why does AI suspicion matter for trust?

Because it flips the balance. For centuries, authenticity was assumed, and fakery was the exception. With AI, doubt has become the default. That shift doesn’t just affect how we see content; it reshapes how we see the people behind it.

How do deepfakes change AI trust?

Deepfakes amplify Synthetic Suspicion by making visual and audio evidence contestable. Detection helps, but day-to-day credibility will depend more on provenance, content credentials, and authenticity verification than gut feel.